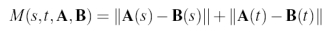

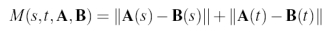

I implemented a Graphcut algorithm for image compositing. The algorithm represents an image as a connected graph with edge weights based on the difference between neighboring pixels. In my implementation, I used the following edge weight:

Additionally, each pixel node has an arc to two terminal nodes, A and B, which represent the source and target images. To constrain a pixel to come from image A, we set the weight of the arc from that pixel to A to be near infinite.

Solving the max flow / min cut problem on this graph yields a cut, that is, a seam between the two images, that runs where the colors in both images are similar.
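The construction above can be sketched on a single scanline. This is a minimal illustration rather than my actual implementation: the function name `min_cut_labels` is hypothetical, the neighbor weight `|A(p) - B(p)| + |A(q) - B(q)|` is an assumed, commonly used choice and not necessarily the metric I used, and max flow is computed with a plain Edmonds-Karp search so that the block needs only the standard library.

```python
from collections import deque


def min_cut_labels(a, b, force_a, force_b):
    """Label each pixel of a 1-D scanline 'A' or 'B' using a min cut.

    a, b: equal-length lists of grayscale values from the two images.
    force_a, force_b: sets of pixel indices constrained to image A or B.
    """
    n = len(a)
    src, sink = n, n + 1                      # terminal nodes for A and B
    cap = [dict() for _ in range(n + 2)]      # residual capacities

    def add_edge(u, v, w, rev_w):
        cap[u][v] = cap[u].get(v, 0) + w
        cap[v][u] = cap[v].get(u, 0) + rev_w

    # Assumed weight: cutting between p and q is cheap where A and B agree.
    weights = [abs(a[p] - b[p]) + abs(a[p + 1] - b[p + 1]) for p in range(n - 1)]
    for p, w in enumerate(weights):
        add_edge(p, p + 1, w, w)              # undirected neighbor edge

    big = 1 + sum(weights)                    # stands in for "near infinite"
    for p in force_a:
        add_edge(src, p, big, 0)
    for p in force_b:
        add_edge(p, sink, big, 0)

    def reachable_from_source():
        """Breadth-first search over edges with remaining capacity."""
        parent = {src: None}
        queue = deque([src])
        while queue:
            u = queue.popleft()
            for v, c in cap[u].items():
                if c > 0 and v not in parent:
                    parent[v] = u
                    if v == sink:
                        return parent
                    queue.append(v)
        return parent

    while True:
        parent = reachable_from_source()
        if sink not in parent:
            break                             # no augmenting path is left
        path, v = [], sink
        while parent[v] is not None:
            path.append((parent[v], v))
            v = parent[v]
        flow = min(cap[u][v] for u, v in path)
        for u, v in path:
            cap[u][v] -= flow
            cap[v][u] += flow

    # Pixels still reachable from the A terminal are on A's side of the cut.
    return ["A" if p in parent else "B" for p in range(n)]


a = [10, 20, 30, 40, 50, 60]
b = [90, 80, 32, 41, 15, 5]
labels = min_cut_labels(a, b, force_a={0}, force_b={5})
print(labels)  # ['A', 'A', 'A', 'B', 'B', 'B']
```

The seam falls between pixels 2 and 3, where the two scanlines nearly agree. A 2-D version would add the same kind of edge between vertical neighbors.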

I found that the metric I used made the resulting composite image very sensitive to the constraints I placed on it. To place better constraints, I chose to show the pixels constrained to come from the source image in the target image.
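One reading of this preview step, sketched with plain nested lists: paste the source-constrained pixels over the target so that the target constraints can be drawn with the source region in view. The helper name `preview_constraints` and the list-of-rows image layout are assumptions made for illustration.

```python
def preview_constraints(source, target, source_mask):
    """Return a copy of target with source pixels shown where source_mask is true.

    All three arguments are lists of rows with identical dimensions.
    """
    return [
        [s if m else t for s, t, m in zip(s_row, t_row, m_row)]
        for s_row, t_row, m_row in zip(source, target, source_mask)
    ]


source = [[1, 2], [3, 4]]
target = [[9, 9], [9, 9]]
source_mask = [[True, False], [False, True]]
preview = preview_constraints(source, target, source_mask)
print(preview)  # [[1, 9], [9, 4]]
```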

*[Results: nine examples, each with six image panels (Source, Target, Mask; Composite, Poisson, Poisson w/ Transparency). Images not available.]*

Despite my attempts to improve composite quality by placing tighter constraints, the algorithm still included unwanted blocks of pixels from the source images. This is apparent in the jet, bear, and Godzilla images. When I did not extend the target mask quite as close to the source mask, the composites were even worse. The algorithm also made the mask of the moon a little too small, which may be because the moon's shadows are close in value to the background. The composite of the building from two different viewpoints was largely a success: the seam between the images seems to follow the contours of the bricks.

Source images with small gaps between details do not seem to be handled well. For example, the trees on the left and the American flag in the Statue of Liberty image are desired in the composite with the tornado image, but the algorithm also placed the pixels between them into the composite, which is not desired. Similarly, Godzilla's spines have a "halo" of background pixels around them in the composite. In other examples, small details that are not constrained to come from the source are omitted: sections of the jet's cone are missing, as is Lady Liberty's torch.

A Poisson blend helps hide some of these issues. In no case was it beneficial to use the Poisson blend with transparency. It is likely that some of the flaws are due to my choice of metric, and this requires further investigation.